Editorial Board

The Visual Computer

Associate Editor, 2018 - Today

Heritage

Editor, 2022 - Today

Publications

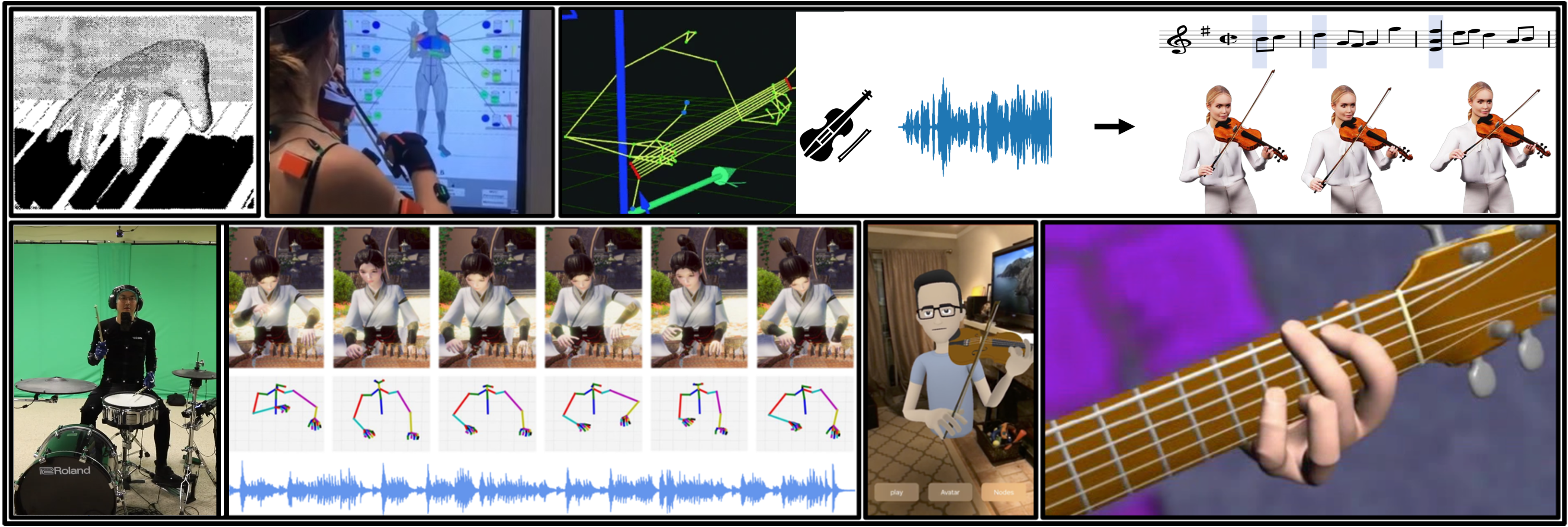

DRUMS: Drummer Reconstruction Using Midi Sequences

Theodoros Kyriakou, Panayiotis Charalambous, Andreas Aristidou

Proceedings of the 18th Annual ACM SIGGRAPH Conference on Motion, Interaction and Games, MIG 2025, Zurich, Switzerland, December 2025.

DRUMS is a MIDI-driven system that generates expressive, full-body drumming performances, combining precise rhythmic accuracy with realistic hand, stick, upper-body, and facial movements. By integrating BiLSTM-based hand motion prediction, phrase-matched upper-body and facial expressions, and procedural foot control through a modular IK framework, our method produces visually convincing and musically aligned drummer animations for applications in digital performance.

[DOI] [paper] [video] [bibtex] [project page]

Disaster evacuation of the old city of Nicosia

Marios Stylianou, Marios Demetriou, Andreas Aristidou

Progress in Disaster Science, Special Issue: AI, Emerging Technologies, and Immersive Solutions for Disaster and Emergency Response, Elsevier, November, 2025.

Presented at the 8th International Disaster and Risk Conference, IDRC 2025, Nicosia, Cyprus, October 22 - 24, 2025

Best student paper award at IDRC 2025.

This paper presents a digital twin–based simulation of evacuation scenarios in Nicosia’s historic walled Old Town, showing how dense urban morphology, gate blockages, and infrastructure changes affect evacuation efficiency and highlighting the need for coordinated, adaptive flow-management strategies to reduce disaster risks in historic cities.

[DOI] [paper] [bibtex] [project page]

MPACT: Mesoscopic Profiling and Abstraction of Crowd Trajectories

Marilena Lemonari, Andreas Panayiotou, Nuria Pelechano, Theodoros Kyriakou, Yiorgos Chrysanthou, Andreas Aristidou, Panayiotis Charalambous

Computer Graphics Forum, Volume 44, Issue 6, September, 2025.

MPACT is a framework that transforms unlabelled crowd data into controllable simulation parameters using image-based encoding and a parameter prediction network trained on synthetic image–profile pairs. It enables intuitive crowd authoring and behavior analysis, achieving high scores in believability, plausibility, and behavioral fidelity across evaluations and user studies.

CEDRL: Simulating Diverse Crowds with Example-Driven Deep Reinforcement Learning

Andreas Panayiotou, Andreas Aristidou, Panayiotis Charalambous

Computer Graphics Forum, Volume 44, Issue 2, May, 2025.

Presented at Eurographics 2025, EG'2025 proceedings, May, 2025.

This paper introduces CEDRL (Crowds using Example-driven Deep Reinforcement Learning), a framework that models diverse and adaptive crowd behaviors by leveraging multiple datasets and a reward function aligned with real-world observations. The approach enables real-time controllability and generalization across scenarios, showcasing enhanced behavior complexity and adaptability in virtual environments.

[DOI] [pre-print paper] [video] [code] [Supplementary Materials] [bibtex] [project page]

Multi-Modal Instrument Performance: A musical database

Theodoros Kyriakou, Andreas Aristidou, Panayiotis Charalambous

Computer Graphics Forum, Volume 44, Issue 2, May, 2025.

Presented at Eurographics 2025, EG'2025 proceedings, May, 2025.

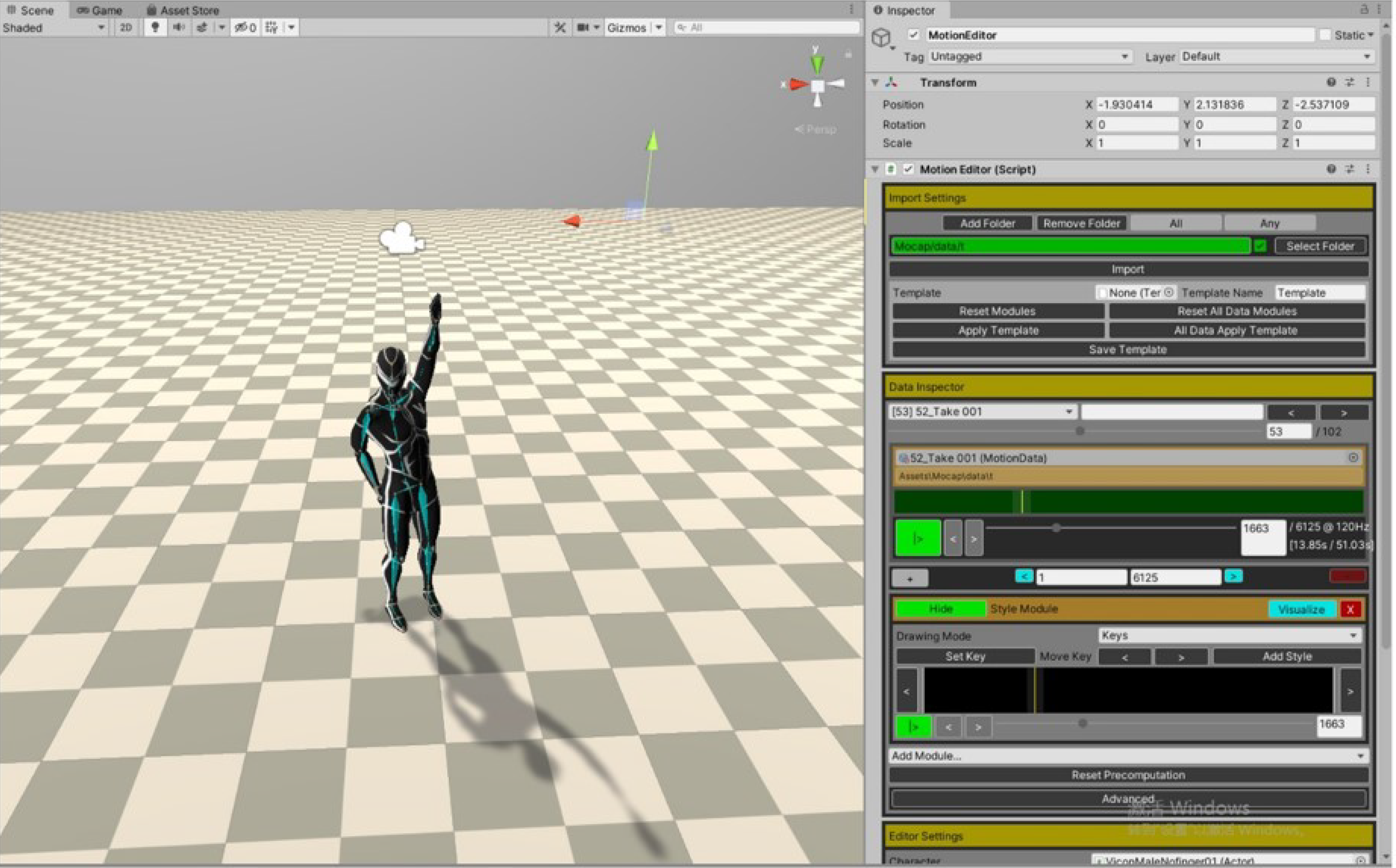

This paper introduces the Multi-Modal Instrument Performances (MMIP) database, the first dataset to combine synchronized high-quality 3D motion capture, audio, video, and MIDI data for musical performances involving guitar, piano, and drums. It highlights the challenges of capturing and managing such multimodal data, offering an open-access repository with tools for exploration, visualization, and playback.

[DOI] [paper] [video] [bibtex] [project page]

DragPoser: Motion Reconstruction from Variable Sparse Tracking Signals via Latent Space Optimization

Jose Luis Pontón, Eduard Pujol, Andreas Aristidou, Carlos Andújar, Nuria Pelechano

Computer Graphics Forum, Volume 44, Issue 2, May, 2025.

Presented at Eurographics 2025, EG'2025 proceedings, May, 2025.

DragPoser is a deep-learning-based motion reconstruction system that takes a variable set of sparse sensors as input and achieves high end-effector position accuracy in real time through a pose optimization process within a structured latent space. Incorporating a Temporal Predictor network with a Transformer architecture, DragPoser surpasses traditional methods in precision, produces natural and temporally coherent poses, and demonstrates robustness and adaptability to dynamic constraints and various input configurations.

[DOI] [pre-print paper] [video] [code] [bibtex] [project page]

A novel multidisciplinary approach for reptile movement and behavior analysis

Savvas Zotos, Marilena Stamatiou, Sofia-Zacharenia Marketaki, Duncan J. Irschick, Jeremy A. Bot, Andreas Aristidou, Emily L. C. Shepard, Mark D. Holton, Ioannis N. Vogiatzakis

Integrative Zoology, Accepted, January 2025.

This paper introduces a multidisciplinary approach to studying reptile behavior, combining tri-axial accelerometers, video recordings, motion capture systems, and 3D reconstruction to create detailed digital archives of movements and behaviors. Using two Mediterranean reptiles as case studies, it highlights the potential of this method to advance research on complex and understudied behaviors, offering ecological insights and tools for behavioral analysis.

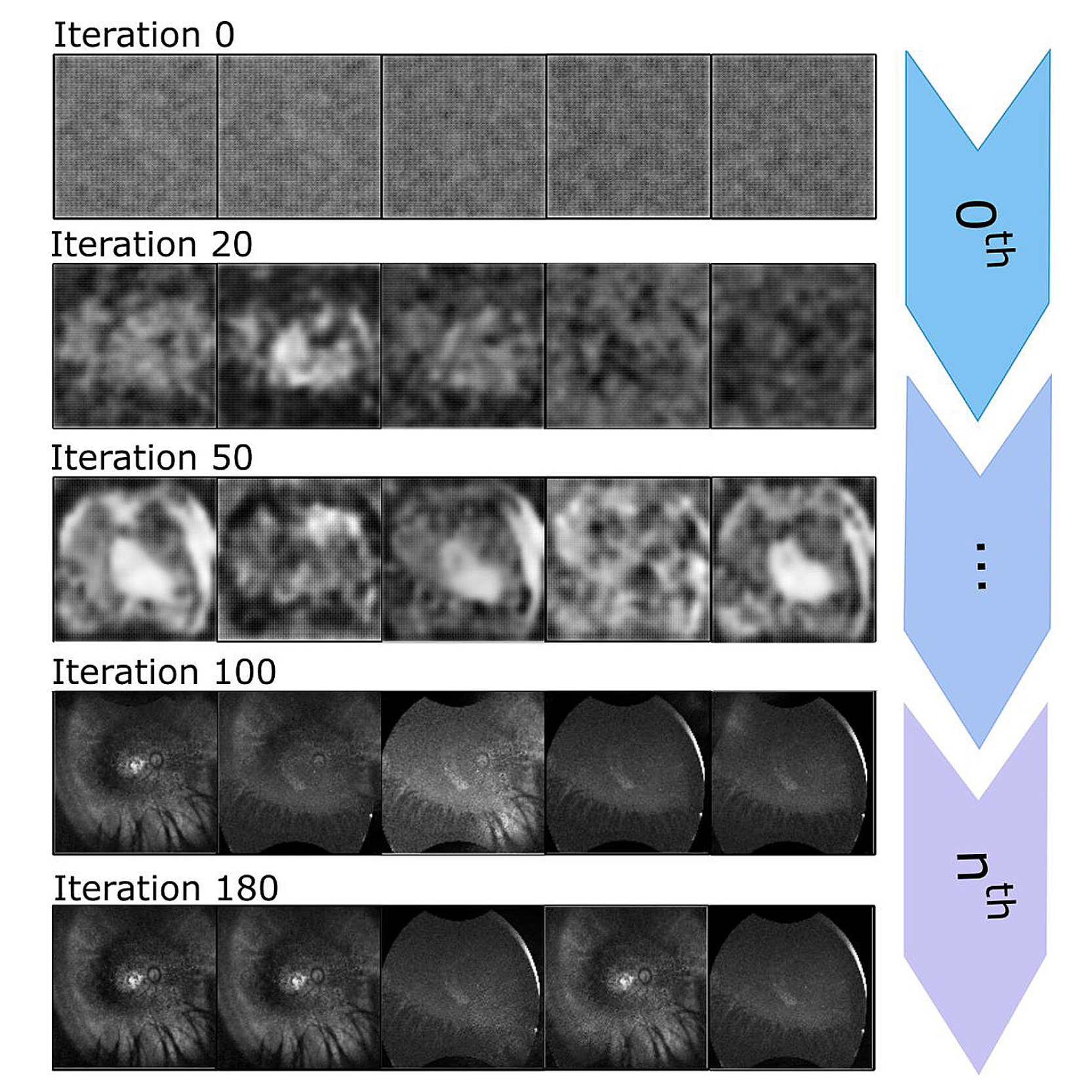

Deep convolutional generative adversarial networks in retinitis pigmentosa disease images augmentation and detection

Paweł Powroźnik, Maria Skublewska-Paszkowska, Katarzyna Nowomiejska, Andreas Aristidou, Andreas Panayides, Robert Rejdak

Advances in Science and Technology Research Journal, Volume 19, no. 2, pages 321-340, 2025.

This study leverages Deep Convolutional Generative Adversarial Networks (DCGAN) and hybrid VGG16-XGBoost techniques to enhance medical datasets, focusing on retinitis pigmentosa, a rare eye condition. The proposed method improves image clarity, dataset augmentation, and detection accuracy, achieving over 90% in key performance metrics and a 19% increase in baseline classification accuracy.

Identifying and Animating Movement of Zeibekiko Sequences by Spatial Temporal Graph Convolutional Network with Multi Attention Modules

Maria Skublewska-Paszkowska, Paweł Powroźnik, Marcin Barszcz, Krzysztof Dziedzic, Andreas Aristidou

Advances in Science and Technology Research Journal, Volume 18, no. 8, pages 217-227, 2024.

This study employs optical motion capture technology to document and translate the Zeibekiko dance into a 3D virtual environment. Using a Spatial Temporal Graph Convolutional Network with Multi Attention Modules (ST-GCN-MAM), the system accurately captures and classifies essential dance sequences by focusing on key body regions, enabling precise, realistic virtual animations with applications in gaming, video production, and digital heritage preservation.

Underwater Virtual Exploration of the Ancient Port of Amathus

Andreas Alexandrou, Filip Skola, Dimitrios Skarlatos, Stella Demesticha, Fotis Liarokapis, Andreas Aristidou

Journal of Cultural Heritage, Volume 70, pages 181-193, November–December 2024.

This work focuses on the digital reconstruction and visualization of underwater cultural heritage, providing a gamified virtual reality (VR) experience of Cyprus' ancient Amathus harbor. Using photogrammetry, we built an immersive VR environment that enables seamless exploration of and interaction with this historic site. Advanced features such as guided tours, procedural generation, and machine learning enhance realism and user engagement. User studies validate the quality of our VR experiences, highlighting minimal discomfort and demonstrating promising potential for advancing underwater exploration and conservation efforts.

[DOI] [pre-print paper] [video] [bibtex] [project page]

Design and Implementation of an Interactive Virtual Library based on its Physical Counterpart

Christina-Georgia Serghides, Giorgos Christoforidis, Nikolas Iakovides, Andreas Aristidou

Virtual Reality, Springer, Volume 28, article number 124, June, 2024.

This work explores the creation of a digital replica of a physical library using photogrammetry and 3D modelling. A Virtual Reality (VR) platform was developed to immerse users in a virtual library experience, which can also serve as a community and knowledge hub. A perceptual study was conducted to understand the current usage of physical libraries, examine the users’ experience in VR, and identify the requirements and expectations for developing a virtual library counterpart. Five key usage scenarios were implemented as a proof of concept, with emphasis on 24/7 access, functionality, and interactivity. A user evaluation study endorsed all of its key attributes and its future viability.

[DOI] [paper] [bibtex] [3D model] [project page]

Virtual Instrument Performances (VIP): A Comprehensive Review

Theodoros Kyriakou, Mercè Álvarez, Andreas Panayiotou, Yiorgos Chrysanthou, Panayiotis Charalambous, Andreas Aristidou

Computer Graphics Forum, Volume 43, Issue 2, April 2024.

Presented at Eurographics 2024, EG'24 STAR papers, April, 2024.

The evolving landscape of performing arts, driven by advancements in Extended Reality (XR) and the Metaverse, presents transformative opportunities for digitizing musical experiences. This comprehensive survey examines the relatively unexplored field of Virtual Instrument Performances (VIP), addressing challenges related to motion capture precision, multi-modal interactions, and the integration of sensory modalities, with a focus on fostering inclusivity, creativity, and live performances in diverse settings.

[DOI] [paper] [bibtex] [project page]

Digitizing Traditional Dances Under Extreme Clothing: The Case Study of Eyo

Temi Ami-Williams, Christina-Georgia Serghides, Andreas Aristidou

Journal of Cultural Heritage, Volume 67, pages 145–157, February, 2024.

The video has been presented at the International Council for Traditional Music (ICTM) 2023 and the Cyprus Dance Film Festival (CDFF) 2023.

This work examines the challenges of capturing movements in traditional African masquerade garments, specifically the Eyo masquerade dance from Lagos, Nigeria. By employing a combination of motion capture technologies, the study addresses the limitations posed by "extreme clothing" and offers valuable insights into preserving cultural heritage dances. The findings lead to an efficient pipeline for digitizing and visualizing folk dances with intricate costumes, culminating in a visually captivating animation showcasing an Eyo masquerade dance performance.

[DOI] [pre-print paper] [video] [promo video] [video:behind the scenes] [bibtex] [project page]

SparsePoser: Real-time Full-body Motion Reconstruction from Sparse Data

Jose Luis Pontón, Haoran Yun, Andreas Aristidou, Carlos Andújar, Nuria Pelechano

ACM Transactions on Graphics, Volume 43, Issue 1, Article No.: 5, pages 1–14.

Presented at SIGGRAPH Asia 2023.

SparsePoser is a novel deep learning-based approach that reconstructs full-body poses using only six tracking devices. The system uses a convolutional autoencoder to generate high-quality human poses learned from motion capture data and a lightweight feed-forward neural network IK component to adjust hands and feet based on the corresponding trackers.

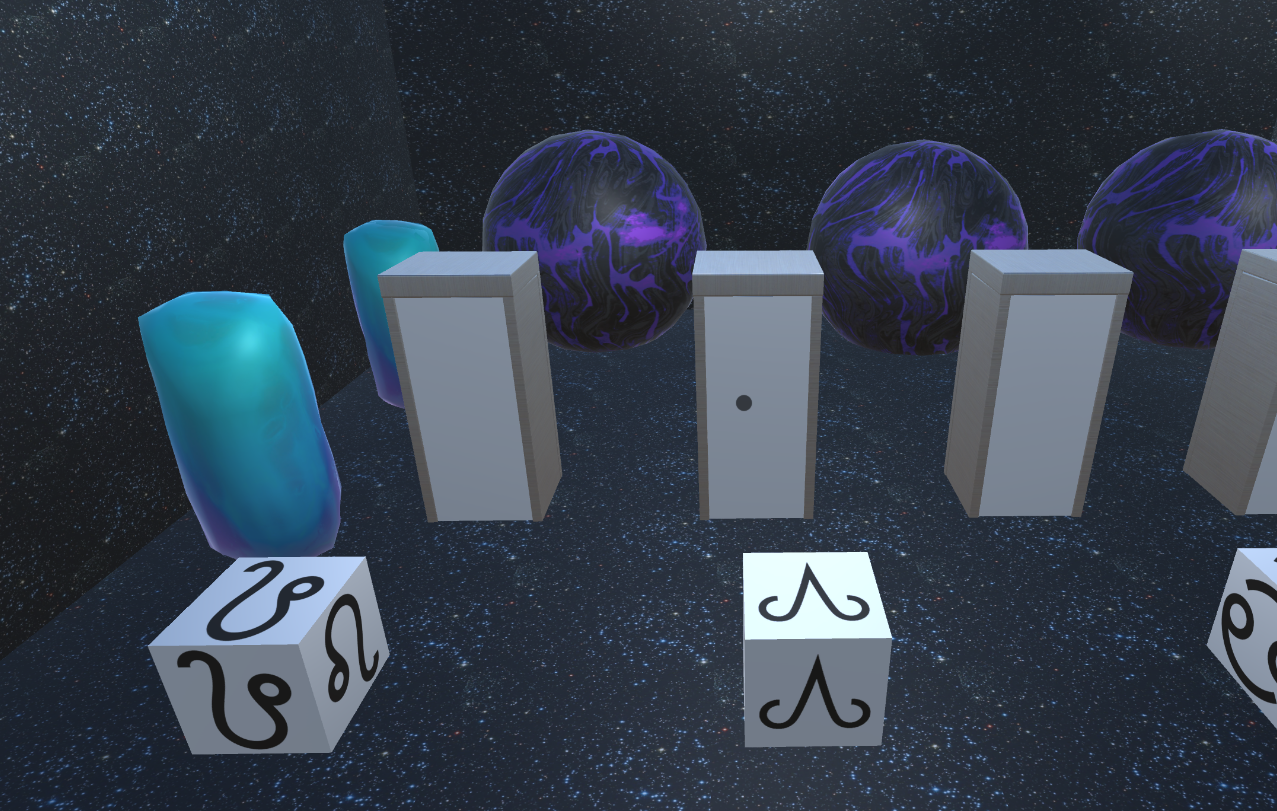

Collaborative VR: Solving riddles in the concept of escape rooms

Afxentis Ioannou, Marilena Lemonari, Fotis Liarokapis, Andreas Aristidou

International Conference on Interactive Media, Smart Systems and Emerging Technologies, IMET 2023.

This work explores alternative means of communication in collaborative virtual environments (CVEs) and their impact on users' engagement and performance. Through a case study of a collaborative VR escape room, we conduct a user study to evaluate the effects of nontraditional communication methods in computer-supported cooperative work (CSCW). Despite the absence of traditional interactions, our study reveals that users can effectively convey messages and complete tasks, akin to real-life scenarios.

Dancing in virtual reality as an inclusive platform for social and physical fitness activities: A survey

Bhuvaneswari Sarupuri, Richard Kulpa, Andreas Aristidou, Franck Multon

The Visual Computer, Volume 40, pages 4055–4070, 2024.

This paper qualitatively evaluates the responses of 292 users of a VR dancing platform, exploring their motivations, experiences, and requirements. We employ OpenAI's Artificial Intelligence platform to automatically extract response categories. The focus is on VR as an inclusive platform for social and physical dancing activities.

[DOI] [paper] [bibtex] [Survey Questionnaire]

Motion-R^3: Fast and Accurate Motion Annotation via Representation-based Representativeness Ranking

Jubo Yu, Tianxiang Ren, Shihui Guo, Fengyi Fang, Kai Wang, Zijiao Zeng, Yazhan Zhang, Andreas Aristidou, Yipeng Qin

arXiv preprint, arXiv:2304.01672 [cs.CV].

In this work, we present a new method for motion annotation based on the representativeness of motion data in a given dataset. Our method ranks motion data by their representativeness in a learned motion representation space. The paper also introduces a dual-level motion contrastive learning method that learns a more informative motion representation space. The proposed method is efficient and can adapt to frequent changes in requirements, enabling agile development of motion annotation models.

[DOI] [paper] [video] [bibtex] [project page]
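
As a rough illustration of ranking by representativeness, the sketch below scores each sample by its mean cosine similarity to all other samples and sorts in descending order. It assumes the embeddings are already given (in the paper they come from the learned representation space, which is not reproduced here); the scoring rule and the names `cosine` and `representativeness_ranking` are hypothetical choices for illustration, not the paper's exact criterion.

```python
import math
from typing import List, Sequence


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity; assumes both vectors are non-zero."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.hypot(*a) * math.hypot(*b))


def representativeness_ranking(embeddings: Sequence[Sequence[float]]) -> List[int]:
    """Return sample indices ordered from most to least representative.

    Hypothetical score: mean cosine similarity of a sample to every other sample.
    """
    n = len(embeddings)
    if n < 2:
        return list(range(n))
    scores = []
    for i in range(n):
        sims = [cosine(embeddings[i], embeddings[j]) for j in range(n) if j != i]
        scores.append(sum(sims) / len(sims))
    return sorted(range(n), key=lambda i: scores[i], reverse=True)


# Three similar motion embeddings and one outlier: the outlier is ranked last.
print(representativeness_ranking([[1.0, 0.0], [0.9, 0.1], [1.0, 0.05], [0.0, 1.0]]))  # [1, 2, 0, 3]
```

Annotating samples in this order would cover the densest parts of the representation space first, which is the intuition the abstract describes.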

Collaborative Museum Heist with Reinforcement Learning

Eleni Evripidou, Andreas Aristidou, Panayiotis Charalambous

Computer Animation and Virtual Worlds, Volume 34, Issue 3-4, May 2023.

Presented at the 36th International Conference on Computer Animation and Social Agents, CASA'23, May, 2023.

In this paper, we present our initial findings of applying Reinforcement Learning techniques to a museum heist game, where trained robbers with different skills learn to cooperate and maximize individual and team rewards while avoiding detection by scripted security guards and cameras, showcasing the feasibility of training both sides concurrently in an adversarial game setting.

Let's All Dance: Enhancing Amateur Dance Motions

Qiu Zhou, Manyi Li, Qiong Zeng, Andreas Aristidou, Xiaojing Zhang, Lin Chen, Changhe Tu

Computational Visual Media, Volume 9, Issue 3, September 2023.

In this paper, we present a deep model that makes amateur dance movements look more professional, improving movement quality in both the spatial and temporal domains. We illustrate the effectiveness of our method on real amateur and artificially generated dance movements. We also demonstrate that our method can synchronize 3D dance motions with any reference audio under non-uniform and irregular misalignment.

[DOI] [paper] [supplementary materials] [video] [code] [data] [bibtex] [project page]

Rhythm is a Dancer: Music-Driven Motion Synthesis with Global Structure

Andreas Aristidou, Anastasios Yiannakidis, Kfir Aberman, Daniel Cohen-Or, Ariel Shamir, Yiorgos Chrysanthou

IEEE Transactions on Visualization and Computer Graphics, Volume 29, Issue 8, August 2023.

Presented at ACM SIGGRAPH/Eurographics Symposium on Computer Animation, SCA'22, September, 2022.

In this work, we present a music-driven neural framework that generates realistic human motions that are rich, avoid repetitions, and jointly form a global structure that respects the culture of a specific dance genre. We illustrate examples from various dance genres and demonstrate choreography control and editing in a number of applications.

[DOI] [paper] [video] [Dance Motion Database] [bibtex] [project page]

Pose Representations for Deep Skeletal Animation

Nefeli Andreou, Andreas Aristidou, Yiorgos Chrysanthou

Computer Graphics Forum, Volume 41, Issue 8, December 2022.

Presented at ACM SIGGRAPH/Eurographics Symposium on Computer Animation, SCA'22, September, 2022.

In this work, we present an efficient method for training neural networks, specifically designed for character animation. We use dual quaternions as the mathematical framework and take advantage of the skeletal hierarchy to avoid rotation discontinuities, a common problem with Euler angle or exponential map parameterizations, and motion ambiguities, a common problem with positional data. Our method does not require re-projection onto skeleton constraints to avoid bone-stretching violations and invalid configurations, and the network learns from both rotational and positional information.

[DOI] [paper] [video] [code] [bibtex] [project page] [more about dual-quaternions and CGA]
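
The dual-quaternion representation can be illustrated with the standard algebra alone. The sketch below is a minimal pure-Python illustration, not the paper's training code: it encodes a rotation quaternion r and translation t as the unit dual quaternion r + ε(½ t r), composes transforms along a parent-child chain, and reads the global translation back as 2 q_d r*. Quaternions are (w, x, y, z) tuples, and all function names are hypothetical.

```python
import math
from typing import Tuple

Quat = Tuple[float, float, float, float]  # (w, x, y, z)
DualQuat = Tuple[Quat, Quat]              # (real part, dual part)


def qmul(a: Quat, b: Quat) -> Quat:
    """Hamilton product of two quaternions."""
    w1, x1, y1, z1 = a
    w2, x2, y2, z2 = b
    return (w1 * w2 - x1 * x2 - y1 * y2 - z1 * z2,
            w1 * x2 + x1 * w2 + y1 * z2 - z1 * y2,
            w1 * y2 - x1 * z2 + y1 * w2 + z1 * x2,
            w1 * z2 + x1 * y2 - y1 * x2 + z1 * w2)


def qconj(q: Quat) -> Quat:
    w, x, y, z = q
    return (w, -x, -y, -z)


def axis_angle(axis, angle: float) -> Quat:
    """Unit quaternion for a rotation of `angle` radians about `axis`."""
    n = math.sqrt(sum(c * c for c in axis))
    s = math.sin(angle / 2) / n
    return (math.cos(angle / 2), axis[0] * s, axis[1] * s, axis[2] * s)


def from_rot_trans(r: Quat, t) -> DualQuat:
    """Unit dual quaternion r + eps * (0.5 * t * r), with t as a pure quaternion."""
    dual = qmul((0.0, t[0], t[1], t[2]), r)
    return r, tuple(0.5 * c for c in dual)


def dq_mul(a: DualQuat, b: DualQuat) -> DualQuat:
    """(ar + eps ad)(br + eps bd) = ar br + eps (ar bd + ad br), since eps^2 = 0."""
    ar, ad = a
    br, bd = b
    dual = tuple(p + q for p, q in zip(qmul(ar, bd), qmul(ad, br)))
    return qmul(ar, br), dual


def translation(dq: DualQuat):
    """Recover t as the vector part of 2 * q_d * conj(q_r)."""
    r, d = dq
    return qmul(tuple(2.0 * c for c in d), qconj(r))[1:]


# Parent joint: rotated 90 degrees about z and placed at (1, 0, 0).
parent = from_rot_trans(axis_angle((0.0, 0.0, 1.0), math.pi / 2), (1.0, 0.0, 0.0))
# Child joint: unit offset along the parent's local x axis, no local rotation.
child_local = from_rot_trans((1.0, 0.0, 0.0, 0.0), (1.0, 0.0, 0.0))
# Global child transform = parent global * child local; its translation is
# (1, 1, 0) up to floating-point error.
child_global = dq_mul(parent, child_local)
print(translation(child_global))
```

Because rotation and translation live in one algebraic object, chaining joints is a single product per bone, which is one reason the representation suits hierarchical skeletons.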

Digitizing Wildlife: The case of reptiles 3D virtual museum

Savvas Zotos, Marilena Lemonari, Michael Konstantinou, Anastasios Yiannakidis, Georgios Pappas, Panayiotis Kyriakou, Ioannis N. Vogiatzakis, Andreas Aristidou

IEEE Computer Graphics and Applications, Feature Article, Volume 42, Issue 5, Sept/Oct. 2022.

In this paper, we design and develop a 3D virtual museum with holistic metadata documentation and a variety of captured reptile behaviors and movements. Our main contribution lies in the procedure of rigging, capturing, and animating reptiles, as well as the development of a number of novel educational applications.

[DOI] [paper] [video] [bibtex] [project page]

Safeguarding our Dance Cultural Heritage

Andreas Aristidou, Alan Chalmers, Yiorgos Chrysanthou, Celine Loscos, Franck Multon, Joseph E. Parkins, Bhuvan Sarupuri, Efstathios Stavrakis

Eurographics Tutorials, April 26, 2022.

In this tutorial, we show how the European project SCHEDAR exploited emerging technologies to digitize, analyze, and holistically document our intangible heritage creations, which is a critical necessity for the preservation and continuity of our identity as Europeans.

[DOI] [paper] [teaser video] [bibtex] [project page]

Virtual Dance Museums: the case of Greek/Cypriot folk dancing

Andreas Aristidou, Nefeli Andreou, Loukas Charalambous, Anastasios Yiannakidis, Yiorgos Chrysanthou

EUROGRAPHICS Workshop on Graphics and Cultural Heritage, GCH'21, Bournemouth, United Kingdom, November 2021.

This paper presents a virtual dance museum developed to educate the wider public, particularly the youngest generations, about the story, costumes, music, and history of our dances. The museum is publicly accessible and also enables motion data reusability, facilitating dance learning applications through gamification.

[DOI] [paper] [bibtex] [project page]

Adult2Child: Motion Style Transfer using CycleGANs

Yuzhu Dong, Andreas Aristidou, Ariel Shamir, Moshe Mahler, Eakta Jain

ACM SIGGRAPH Conference on Motion, Interaction, and Games, MIG'20, October 2020.

This paper presents an effective style translation method that transfers adult motion capture data to the style of child motion using CycleGANs. Our method allows training on unpaired data using a relatively small number of child and adult motion sequences that are not required to be temporally aligned. We have also captured high-quality adult2child 3D motion capture data, which are publicly available for future studies.

[DOI] [paper] [video] [code] [Adult2Child Motion Database] [bibtex] [project page]

MotioNet: 3D Human Motion Reconstruction from Monocular Video with Skeleton Consistency

Mingyi Shi, Kfir Aberman, Andreas Aristidou, Taku Komura, Dani Lischinski, Daniel Cohen-Or, Baoquan Chen

ACM Transactions on Graphics, 40(1), Article 1, 2020.

Presented at SIGGRAPH Asia 2020.

MotioNet is a deep neural network that directly reconstructs the motion of a 3D human skeleton from monocular video. It decomposes sequences of 2D joint positions into two separate attributes: a single symmetric skeleton, encoded by bone lengths, and a sequence of 3D joint rotations associated with global root positions and foot contact labels. We show that enforcing a single consistent skeleton along with temporally coherent joint rotations constrains the solution space, leading to more robust handling of self-occlusions and depth ambiguities.
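
To make the decomposition concrete: once a single set of bone lengths is fixed for the whole sequence, each frame's 3D joint positions follow from forward kinematics over that frame's joint rotations and global root position. The sketch below assumes a parent-index skeleton, unit rest-pose bone directions, and 3x3 rotation matrices; it is a generic forward-kinematics illustration with hypothetical names, not MotioNet's network or its symmetry constraint.

```python
import math
from typing import List, Sequence

Mat3 = List[List[float]]
Vec3 = List[float]


def matmul(a: Mat3, b: Mat3) -> Mat3:
    return [[sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3)] for i in range(3)]


def matvec(a: Mat3, v: Sequence[float]) -> Vec3:
    return [sum(a[i][k] * v[k] for k in range(3)) for i in range(3)]


def rot_z(theta: float) -> Mat3:
    c, s = math.cos(theta), math.sin(theta)
    return [[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]]


IDENTITY = rot_z(0.0)


def forward_kinematics(parents, bone_dirs, bone_lengths, local_rots, root_pos) -> List[Vec3]:
    """Joint positions for one frame.

    parents[j] is the parent of joint j (-1 for the root; parents come first),
    bone_dirs[j] is the unit rest direction from the parent to joint j in the
    parent's frame, bone_lengths[j] scales it (shared by all frames), and
    local_rots[j] is joint j's rotation in this frame.
    """
    n = len(parents)
    global_rots: List[Mat3] = [IDENTITY] * n
    positions: List[Vec3] = [[0.0, 0.0, 0.0]] * n
    for j in range(n):
        p = parents[j]
        if p < 0:
            global_rots[j] = local_rots[j]
            positions[j] = list(root_pos)
        else:
            global_rots[j] = matmul(global_rots[p], local_rots[j])
            offset = [bone_lengths[j] * d for d in bone_dirs[j]]
            step = matvec(global_rots[p], offset)
            positions[j] = [a + b for a, b in zip(positions[p], step)]
    return positions


# A three-joint chain whose bone lengths stay fixed across all frames.
parents = [-1, 0, 1]
bone_dirs = [[0.0, 0.0, 0.0], [1.0, 0.0, 0.0], [1.0, 0.0, 0.0]]
bone_lengths = [0.0, 1.0, 1.0]
frames = [
    ([IDENTITY, IDENTITY, IDENTITY], [0.0, 0.0, 0.0]),
    ([rot_z(math.pi / 2), IDENTITY, IDENTITY], [0.0, 0.0, 0.0]),  # chain now points along +y
]
for rots, root in frames:
    print(forward_kinematics(parents, bone_dirs, bone_lengths, rots, root))
```

Since only rotations and the root position vary per frame, bone lengths cannot drift over time, which mirrors the consistency argument in the abstract.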

Salsa dance learning evaluation and motion analysis in gamified virtual reality environment

Simon Senecal, Niels A. Nijdam, Andreas Aristidou, Nadia Magnenat-Thalmann

Multimedia Tools and Applications, 79 (33-34): 24621-24643, September 2020.

We propose an interactive learning application in the form of a virtual reality game that aims to help users improve their salsa dancing skills. The application consists of three components: a virtual partner with interactive control to dance with, visual and haptic feedback, and a game mechanic with dance tasks. Learning is evaluated and analyzed using Musical Motion Features and the Laban Motion Analysis system before and after training, showing that the profiles of non-dancers converge toward those of regular dancers, which validates the learning process.

[DOI] [paper] [video] [bibtex] [project page]

Digital Dance Ethnography: Organizing Large Dance Collections

Andreas Aristidou, Ariel Shamir, Yiorgos Chrysanthou

ACM Journal on Computing and Cultural Heritage, 12(4), Article 29, 2019.

This paper presents a contextual motion analysis method that organizes dance data semantically to form the first digital dance ethnography. The method exploits the contextual correlation between dances and distinguishes fine-grained differences between semantically similar motions. It illustrates a number of different organization trees and portrays the chronological and geographical evolution of dances.

[DOI] [paper] [video] [Dance Motion Database] [bibtex] [project page]

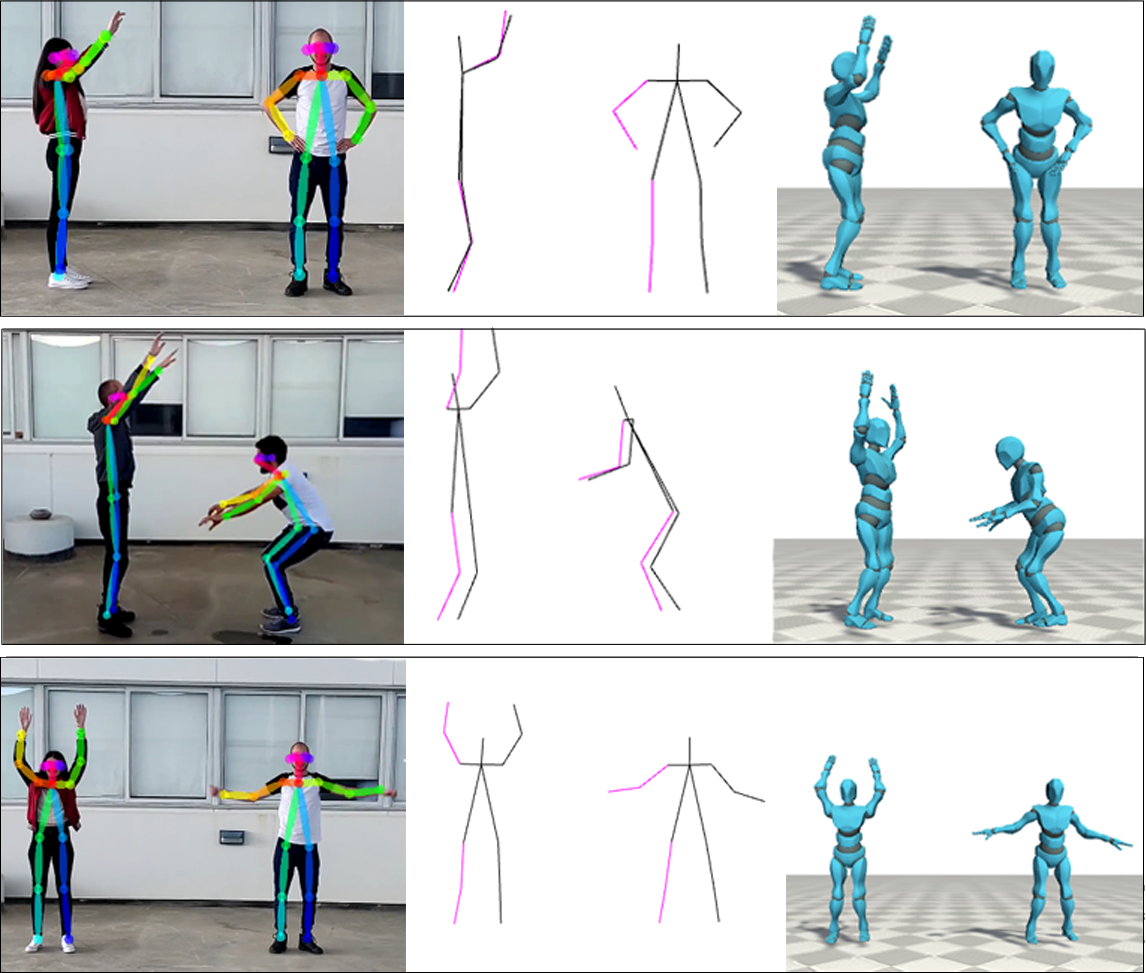

Real-time 3D Human Pose and Motion Reconstruction from Monocular RGB Videos

Anastasios Yiannakides, Andreas Aristidou, Yiorgos Chrysanthou

Comp. Animation & Virtual Worlds, 30(3-4), 2019.

Proceedings of Computer Animation and Social Agents - CASA'19

In this paper, we present a method that reconstructs articulated human motion captured by a monocular RGB camera. Our method matches 2D poses of multiple characters, estimated with deep networks, against 2D multi-view joint projections of 3D motion data to retrieve the 3D body pose of the tracked character. By taking the temporal consistency of motion into account, it generates natural and smooth animations in real time, without bone-length violations.

[DOI] [paper] [video] [bibtex] [project page]

Deep Motifs and Motion Signatures

Andreas Aristidou, Daniel Cohen-Or, Jessica K. Hodgins, Yiorgos Chrysanthou, Ariel Shamir

ACM Transactions on Graphics, 37(6), Article 187, 2018.

Proceedings of SIGGRAPH Asia 2018.

We introduce deep motion signatures, which are time-scale and temporal-order invariant, offering a succinct and descriptive representation of motion sequences. We divide motion sequences into short-term movements and then characterize them by the distribution of those movements. Motion signatures allow segmenting, retrieving, and synthesizing contextually similar motions.

[DOI] [paper] [video] [Dance Motion Database] [bibtex] [project page]
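
The idea of characterizing a sequence by the distribution of its short-term movements can be sketched as a bag-of-words histogram. In the sketch below, raw flattened frame windows and a given list of motif centres stand in for the paper's learned deep motion-word features and motifs, which are not reproduced here; the window size, stride, total-variation distance, and all names are hypothetical choices. Because the histogram ignores order and is normalized by the number of words, reordering movements or holding each one uniformly longer leaves it unchanged in this toy case.

```python
import math
from collections import Counter
from typing import List, Sequence


def motion_words(frames: Sequence[Sequence[float]], window: int, stride: int) -> List[List[float]]:
    """Cut a sequence into short windows, each flattened into one feature vector."""
    return [[v for f in frames[i:i + window] for v in f]
            for i in range(0, len(frames) - window + 1, stride)]


def signature(frames, motifs, window=2, stride=2) -> List[float]:
    """Normalized histogram of nearest-motif assignments over all motion words."""
    words = motion_words(frames, window, stride)
    if not words:
        raise ValueError("sequence is shorter than one window")
    labels = [min(range(len(motifs)), key=lambda k: math.dist(w, motifs[k])) for w in words]
    counts = Counter(labels)
    return [counts[k] / len(words) for k in range(len(motifs))]


def signature_distance(a, b) -> float:
    """Total-variation distance between two signatures (0 means identical)."""
    return 0.5 * sum(abs(x - y) for x, y in zip(a, b))


motifs = [[0.0, 0.0], [1.0, 1.0]]       # hypothetical motif centres
a = [[0.0], [0.0], [1.0], [1.0]]
b = [[1.0], [1.0], [0.0], [0.0]]        # same movements, reversed order
c = [[0.0]] * 4 + [[1.0]] * 4           # same movements, each held twice as long
print(signature(a, motifs), signature(b, motifs), signature(c, motifs))  # [0.5, 0.5] [0.5, 0.5] [0.5, 0.5]
```

Comparing signatures with a histogram distance then supports retrieval of contextually similar sequences regardless of their length.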

Self-similarity Analysis for Motion Capture Cleaning

Andreas Aristidou, Daniel Cohen-Or, Jessica K. Hodgins, Ariel Shamir

Computer Graphics Forum, 37(2): 297-309, 2018.

Proceedings of Eurographics 2018.

Our method automatically analyzes mocap sequences of closely interacting performers based on self-similarity. We define motion-words consisting of short sequences of joint transformations, and use a time-scale invariant, outlier-tolerant similarity measure to find their k-nearest neighbors (KNN). This allows detecting abnormalities and suggesting corrections.

[DOI] [paper] [video] [bibtex] [project page]
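
The detection idea can be sketched in a few lines, with loud simplifications: plain Euclidean distance stands in for the paper's time-scale invariant, outlier-tolerant similarity measure, and a single 1-D signal stands in for per-joint transformations. Motion-words are overlapping windows of frames; a word whose k nearest non-overlapping neighbours are all far away is flagged as a likely abnormality. The names and the thresholding rule (`factor` times the median score, with a small `floor`) are hypothetical.

```python
import math
from statistics import median
from typing import List, Sequence


def motion_words(frames: Sequence[Sequence[float]], window: int) -> List[List[float]]:
    """Overlapping windows of `window` frames (stride 1), flattened."""
    return [[v for f in frames[i:i + window] for v in f]
            for i in range(len(frames) - window + 1)]


def knn_scores(words: List[List[float]], k: int, window: int) -> List[float]:
    """Mean distance from each word to its k nearest non-overlapping words."""
    scores = []
    for i, w in enumerate(words):
        # Overlapping words share frames and are trivially similar, so skip them.
        dists = sorted(math.dist(w, words[j]) for j in range(len(words)) if abs(i - j) >= window)
        nearest = dists[:k]
        scores.append(sum(nearest) / len(nearest) if nearest else math.inf)
    return scores


def flag_abnormal(frames, window=3, k=3, factor=3.0, floor=1e-6) -> List[int]:
    """Indices of motion-words whose kNN score is far above the median score."""
    scores = knn_scores(motion_words(frames, window), k, window)
    threshold = max(factor * median(scores), floor)
    return [i for i, s in enumerate(scores) if s > threshold]


# A periodic 1-D "joint signal" with one corrupted frame at index 30.
frames = [[float(v)] for v in [0, 1, 2, 1] * 15]
frames[30] = [9.0]
print(flag_abnormal(frames))  # [28, 29, 30]: the three words containing frame 30
```

The flagged words localize the corrupted frame; a correction step could then replace it using its nearest neighbours, in the spirit of the abstract.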

Inverse Kinematics Techniques in Computer Graphics: A Survey

Andreas Aristidou, Joan Lasenby, Yiorgos Chrysanthou, Ariel Shamir

Computer Graphics Forum, 37(6): 35-58, 2018.

Presented at Eurographics 2018 (STAR paper).

In this survey, we present a comprehensive review of the IK problem and the solutions developed over the years from the computer graphics point of view. The most popular IK methods are discussed with regard to their performance, computational cost and the smoothness of their resulting postures, while we suggest which IK family of solvers is best suited for particular problems. Finally, we indicate the limitations of the current IK methodologies and propose future research directions.

[DOI] [paper] [bibtex] [project page]

Style-based Motion Analysis for Dance Composition

Andreas Aristidou, Efstathios Stavrakis, Margarita Papaefthimiou, George Papagiannakis, Yiorgos Chrysanthou

The Visual Computer, 34(12), 1725-1737, 2018.

This work presents a motion analysis and synthesis framework, based on Laban Movement Analysis, that respects stylistic variations and thus is suitable for dance motion synthesis. Implemented in the context of Motion Graphs, it is used to eliminate potentially problematic transitions and synthesize style-coherent animation, without requiring prior labeling of the data.

[DOI] [paper] [video] [Dance Motion Database] [bibtex] [project page]

Emotion Control of Unstructured Dance Movements

Andreas Aristidou, Qiong Zeng, Efstathios Stavrakis, KangKang Yin, Daniel Cohen-Or, Yiorgos Chrysanthou, Baoquan Chen

ACM SIGGRAPH/Eurographics Symposium on Computer Animation, SCA'17. Eurographics Association, July, 2017.

We present a motion stylization technique suitable for highly expressive mocap data, such as contemporary dances. The method varies the emotion expressed in a motion by modifying its underlying geometric features. Even non-expert users can stylize dance motions by supplying an emotion modification as the single parameter of our algorithm.

[DOI] [paper] [video] [Dance Motion Database] [bibtex] [project page]

Hand Tracking with Physiological Constraints

Andreas Aristidou

The Visual Computer, 34(2): 213-228, 2018.

We present a simple and efficient methodology for tracking and reconstructing 3D hand poses. Using an optical motion capture system with markers positioned at strategic points, we acquire the movement of the hand and establish its orientation using a minimum number of markers. An Inverse Kinematics solver is then employed to control the postures of the hand, subject to physiological constraints that restrict the allowed movements to a feasible and natural set.

[DOI] [paper] [video] [bibtex] [project page]

Continuous body emotion recognition system during theater performances

Simon Senecal, Louis Cuel, Andreas Aristidou, Nadia Magnenat-Thalmann

Comp. Animation & Virtual Worlds, 27(3-4): 311-320, 2016.

Proceedings of Computer Animation and Social Agents - CASA'16

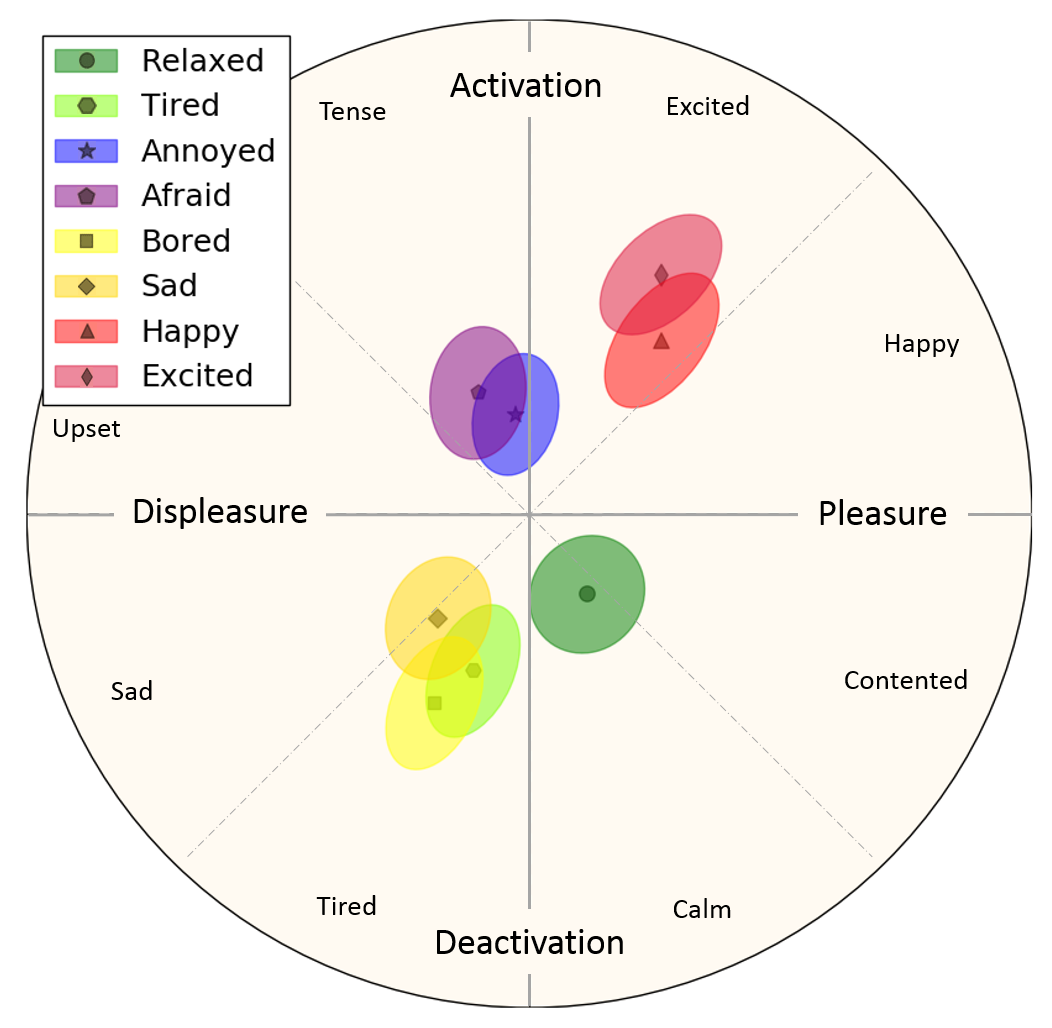

We propose a system for continuously recognizing the emotional behavior that people express during communication, based on their gestures and whole-body dynamic motion. The features used to classify the motion are inspired by Laban Movement Analysis. Using a trained neural network and annotated data, our system describes the motion behavior over time as trajectories on the Russell Circumplex Model diagram during theater performances.

Extending FABRIK with Model Constraints

Andreas Aristidou, Yiorgos Chrysanthou, Joan Lasenby

Comp. Animation & Virtual Worlds, 27(1): 35-57, 2016.

This paper addresses the problem of manipulating articulated figures in an interactive and intuitive fashion for the design and control of their posture using the FABRIK algorithm; the algorithm has been extended to support a variety of joint types and has been evaluated on a humanoid model.

[DOI] [paper] [video] [bibtex] [project page]

Folk Dance Evaluation Using Laban Movement Analysis

Andreas Aristidou, Efstathios Stavrakis, Panayiotis Charalambous, Yiorgos Chrysanthou, Stephania L. Himona

ACM Journal on Computing and Cultural Heritage, 8(4): 1-19, 2015.

Best paper award at EG GCH 2014.

We present a framework based on the principles of Laban Movement Analysis (LMA) that aims to identify style qualities in dance motions, and can be subsequently used for motion comparison and evaluation. We have designed and implemented a prototype virtual reality simulator for teaching folk dances in which users can preview dance segments performed by a 3D avatar and repeat them. The user’s movements are captured and compared to the folk dance template motions; then, intuitive feedback is provided to the user based on the LMA components.

[DOI] [paper] [video] [bibtex] [project page]

Emotion analysis and classification: Understanding the performers’ emotions using LMA entities

Andreas Aristidou, Panayiotis Charalambous, Yiorgos Chrysanthou

Computer Graphics Forum, 34(6): 262–276, 2015.

Presented at Eurographics 2016.

We propose a variety of features that encode characteristics of motion, in terms of Laban Movement Analysis, for motion classification and indexing purposes. Our framework can be used to extract both bodily and stylistic characteristics, taking into consideration not only the geometry of the pose but also the qualitative characteristics of the motion. This work provides some insights into how people express emotional states through their body, while the proposed features can be used as an alternative or complement to standard similarity, motion classification, and synthesis methods.

[DOI] [paper] [video] [Dance Motion Database] [bibtex] [project page]

Cypriot Intangible Cultural Heritage: Digitizing Folk Dances

Andreas Aristidou, Efstathios Stavrakis, Yiorgos Chrysanthou

Cyprus Computer Society journal, Issue 25, pages 42-49, 2014.

We aim to preserve the Cypriot folk dance heritage by creating a state-of-the-art, publicly accessible digital archive of folk dances. Apart from the rare video materials commonly used to document dance performances, our dance library uses three-dimensional motion capture technologies to record and archive high-quality motion data of expert dancers.

[paper] [bibtex] [project page]

Emotion Recognition for Exergames using Laban Movement Analysis

Haris Zacharatos, Christos Gatzoulis, Yiorgos Chrysanthou, Andreas Aristidou

ACM SIGGRAPH Conference on Motion in Games, MIG'13, Ireland, November 7-9, 2013.

Exergames currently lack the ability to detect and adapt to players' emotional states to enhance the user experience. We propose using body motion features based on Laban Movement Analysis (LMA) to classify four emotional states—concentration, meditation, excitement, and frustration—with high accuracy, as demonstrated by experimental results.

Real-time marker prediction and CoR estimation in optical motion capture

Andreas Aristidou, Joan Lasenby

The Visual Computer, 29 (1): 7-26, 2013.

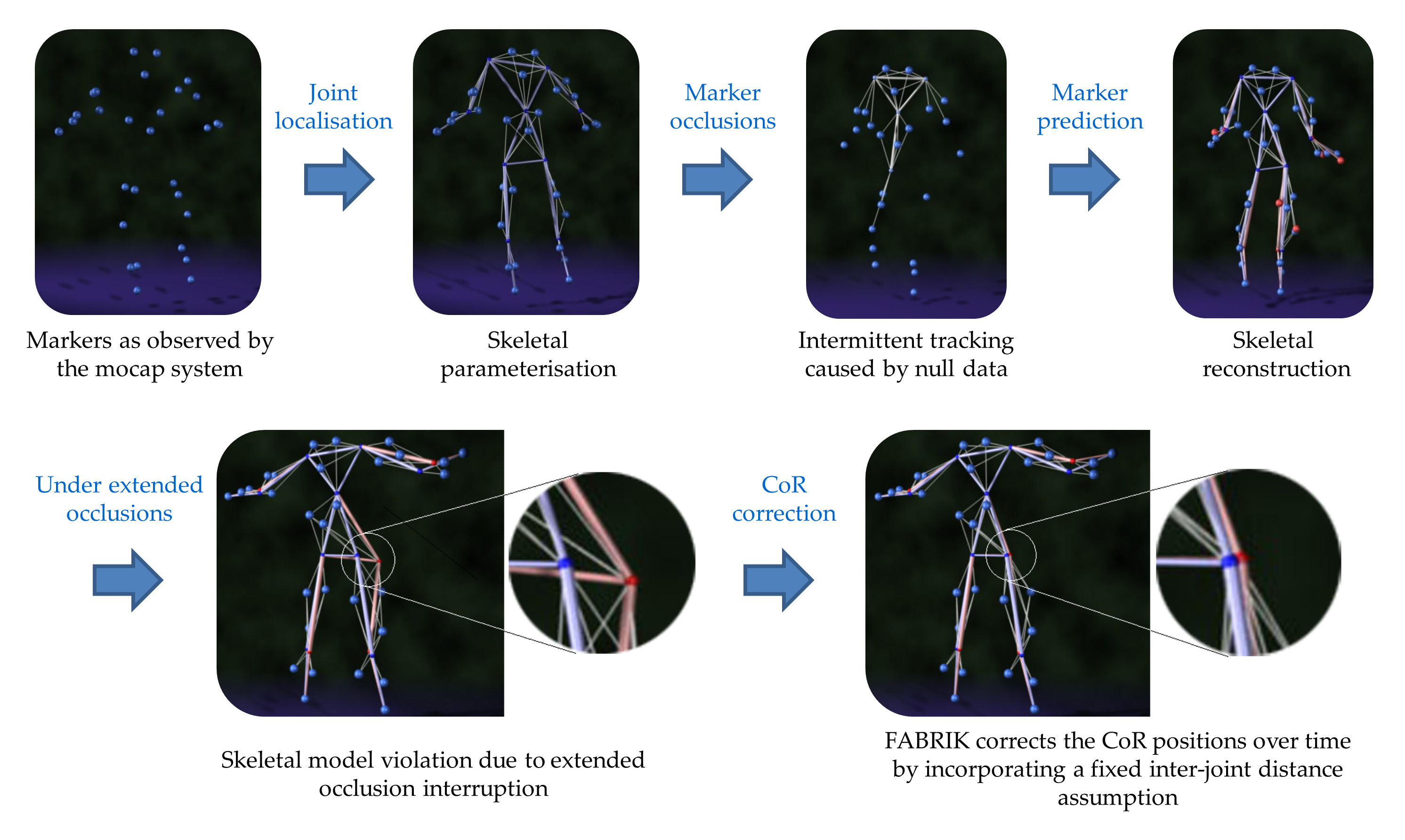

An integrated framework is presented which predicts the occluded marker positions using a Variable Turn Model within an Unscented Kalman filter. Inferred information from neighbouring markers is used as observation states; these constraints are efficient, simple, and implementable in real time. An Inverse Kinematics solver is then applied, ensuring that the bone lengths remain constant over time; the system can thereby maintain a continuous data flow.

[DOI] [paper] [video] [bibtex] [project page]

FABRIK: a fast, iterative solver for the inverse kinematics problem

Andreas Aristidou, Joan Lasenby

Graphical Models, 73(5): 243-260, 2011.

A novel heuristic method, called Forward And Backward Reaching Inverse Kinematics (FABRIK), is described that avoids the use of rotational angles or matrices, and instead finds each joint position via locating a point on a line. Thus, it converges in few iterations, has low computational cost and produces visually realistic poses. Constraints can easily be incorporated within FABRIK and multiple chains with multiple end effectors are also supported.

[DOI] [paper] [video] [bibtex] [project page]
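A minimal sketch of the basic single-chain FABRIK iteration described above, assuming an unconstrained chain with a fixed root and nonzero segment lengths. Joint limits and multiple end effectors are omitted. The function name and the `tol`/`max_iter` parameters are illustrative choices.

```python
import numpy as np


def fabrik(joints, target, tol=1e-4, max_iter=100):
    """Unconstrained single-chain FABRIK.

    joints: (n, d) joint positions; joints[0] is the fixed root.
    target: (d,) desired end-effector position.
    Returns a new (n, d) array of joint positions.
    """
    p = np.asarray(joints, dtype=float).copy()
    t = np.asarray(target, dtype=float)
    d = np.linalg.norm(np.diff(p, axis=0), axis=1)  # fixed segment lengths
    root = p[0].copy()

    if np.linalg.norm(t - root) > d.sum():
        # Unreachable target: stretch the chain in a straight line towards it.
        for i in range(len(d)):
            lam = d[i] / np.linalg.norm(t - p[i])
            p[i + 1] = (1 - lam) * p[i] + lam * t
        return p

    for _ in range(max_iter):
        if np.linalg.norm(p[-1] - t) <= tol:
            break
        # Forward reaching: snap the end effector to the target, walk to the root.
        p[-1] = t
        for i in range(len(d) - 1, -1, -1):
            lam = d[i] / np.linalg.norm(p[i + 1] - p[i])
            p[i] = (1 - lam) * p[i + 1] + lam * p[i]
        # Backward reaching: restore the root, walk out to the end effector.
        p[0] = root
        for i in range(len(d)):
            lam = d[i] / np.linalg.norm(p[i + 1] - p[i])
            p[i + 1] = (1 - lam) * p[i] + lam * p[i + 1]
    return p
```

Each update places a joint on the line through its neighbour at exactly one segment length away. No angles or rotation matrices are involved, and every segment keeps its length after each backward pass.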

Other Publications

Books

Interactive Media for Cultural Heritage

Editors: Fotis Liarokapis, Maria Shehade, Andreas Aristidou, Yiorgos Chrysanthou

Springer Series on Cultural Computing, Springer Cham, 2025.

This edited book explores the latest advancements in interactive media applied to Digital Cultural Heritage research, covering areas from visual data acquisition to immersive experiences like extended reality and digital storytelling. Structured into four sections, it offers theoretical discussions and diverse case studies, making it a valuable resource for academics, scholars, researchers, and students interested in interdisciplinary approaches to cultural heritage preservation and exploration through emerging technologies.

[DOI] [book] [bibtex]

Book Chapters

- Motion labelling and recognition: A case study on the Zeibekiko dance

Interactive Media for Cultural Heritage, In F. Liarokapis, M. Shehade, A. Aristidou and Y. Chrysanthou (Eds), Springer Series on Cultural Computing, Springer, 2025.

[DOI] [chapter] [bibtex]

- Inverse Kinematics solutions using Conformal Geometric Algebra

Guide to Geometric Algebra in Practice, In L. Dorst and J. Lasenby (Eds), pages 47-62, Springer Verlag, 2011.

[DOI] [chapter] [ppt] [bibtex] [project page]

Conference Proceedings

- Virtual Library in the concept of digital twins

In Proceedings of the International Conference on Interactive Media, Smart Systems and Emerging Technologies, IMET 2022, Limassol, Cyprus, October 4 - 7, 2022.

[DOI] [paper] [bibtex] [3D model] [project page]

- LMA-Based Motion Retrieval for Folk Dance Cultural Heritage

In Proceedings of the 5th International Conference on Cultural Heritage (EuroMed'14), LNCS, Volume 8740, pages 207-216, Limassol, Cyprus, November 3 – 8, 2014.

[DOI] [paper] [bibtex]

- Motion Analysis for Folk Dance Evaluation [Best Paper Award]

In Proceedings of the 12th EUROGRAPHICS Workshop on Graphics and Cultural Heritage (GCH'14), pages 55-64, Darmstadt, Germany, October 5 – 8, 2014.

[DOI] [paper] [bibtex]

- Feature extraction for human motion indexing of acted dance performances

In Proceedings of the 9th International Conference on Computer Graphics Theory and Applications (GRAPP'14), pages 277-287, Portugal, January 05-08, 2014.

[DOI] [paper] [bibtex]

- Motion indexing of different emotional states using LMA components

In SIGGRAPH Asia Technical Briefs (SA’13), ACM, New York, USA, 21:1-21:4, 2013.

[DOI] [paper] [bibtex]

- Digitization of Cypriot Folk Dances

In Proceedings of the 4th International Conference on Progress in Cultural Heritage Preservation (EuroMed'12), LNCS, Volume 7616, pages 404-413, 2012.

[DOI] [paper] [bibtex]

- Motion Capture with Constrained Inverse Kinematics for Real-Time Hand Tracking

In IEEE Proceedings of the 4th International Symposium on Communications, Control and Signal Processing (ISCCSP'10), Limassol, Cyprus, May 3-5, 2010.

[DOI] [paper] [bibtex]

- Predicting Missing Markers to Drive Real-Time Centre of Rotation Estimation

In Proceedings of the International Conference on Articulated Motion and Deformable Objects (AMDO'08), LNCS, Volume 5098, pages 238-247, Mallorca, Spain, July 9-11, 2008.

[DOI] [paper] [bibtex]

- Real-Time Estimation of Missing Markers in Human Motion Capture

In IEEE Proceedings of the International Conference on Bioinformatics and Biomedical Engineering (iCBBE'08), pages 1343-1346, Shanghai, China, May 16-18, 2008.

[DOI] [paper] [bibtex]

- Tracking Multiple Sports Players for Mobile Display

In Proceedings of the International Conference on Image Processing, Computer Vision, and Pattern Recognition (IPCV'07), pages 53-59, Las Vegas, USA, June 25-28, 2007.

[DOI] [paper] [bibtex]

- Violent Content Classification using Audio Features

In Proceedings of the Hellenic Artificial Intelligence Conference SETN-06, LNCS, Volume 3955, pages 502-507, Heraklion, Crete, Greece, May 18-20, 2006.

[DOI] [paper] [bibtex]

Short Papers and Posters

- CGA, a mathematical framework for motion continuity in deep neural networks

In Proceedings of the International Conference on Empowering Novel Geometric Algebra for Graphics & Engineering (ENGAGE'20), Geneva, Switzerland, September 2020.

[paper] [bibtex]

- Virtual Dance Museum: the case of Cypriot folk dancing

In Proceedings of the International Conference on Emerging Technologies and the Digital Transformation of Museums and Heritage Sites, RISE IMET, Nicosia, Cyprus, June 2020.

[paper] [bibtex]

- Adult2Child Age Regression Using CycleGANs

In Proceedings of the ACM Symposium on Applied Perception (SAP'19), Barcelona, Spain, September 19-20, 2019.

[paper] [bibtex]

- A Conformal Geometric Algebra framework for Mixed Reality and mobile display

In Proceedings of the 6th Conference on Applied Geometric Algebra in Computer Science and Engineering (AGACSE'15), Barcelona, Spain, July 2015.

[paper] [bibtex]

Final Thesis

- Tracking and Modelling Motion for Biomechanical Analysis

A dissertation submitted to the University of Cambridge for the Degree of Doctor of Philosophy, Cambridge, October 2010.

Supervisor: Dr Joan Lasenby, Examiners: Prof William J. Fitzgerald & Prof Adrian Hilton.

[DOI] [paper] [bibtex]

- A robust method for tracking sports players for web or mobile display

A dissertation submitted to King's College London for the Degree of Master of Science, London, September 2006.

Supervisor: Prof Hamid Aghvami

[paper] [bibtex]

- Reliable indexing and recognition of violent scenes using audio features

Annual journal of the Department of Informatics & Telecommunications, Best dissertations of the year, pages 127-136, Athens, Greece, 2006.

Supervisor: Prof Sergios Theodoridis

[paper] [bibtex]

Technical Reports

- Marker Prediction and Skeletal Reconstruction in Motion Capture Technology

Technical Report (UCY-CS-TR-13-2), University of Cyprus, August 2013.

[paper] [bibtex]

- Inverse Kinematics: a review of existing techniques and introduction of a new fast iterative solver

Technical Report (CUEDF-INFENG, TR-632), University of Cambridge, September 2009.

[paper] [bibtex]

- Methods for Real-time Restoration and Estimation in Optical Motion Capture

Technical Report (CUEDF-INFENG, TR-619), University of Cambridge, January 2009.

[paper] [bibtex]

© 2025 Andreas Aristidou