MotioNet: 3D Human Motion Reconstruction from Monocular Video with Skeleton Consistency

MotioNet: 3D Human Motion Reconstruction from Monocular Video with Skeleton Consistency

Mingyi Shi, Kfir Aberman, Andreas Aristidou, Taku Komura, Dani Lischinski, Daniel Cohen-Or, Baoquan Chen

ACM Transaction on Graphics, 40(1), Article 1, 2020.

Presented at SIGGRAPH Asia 2020.

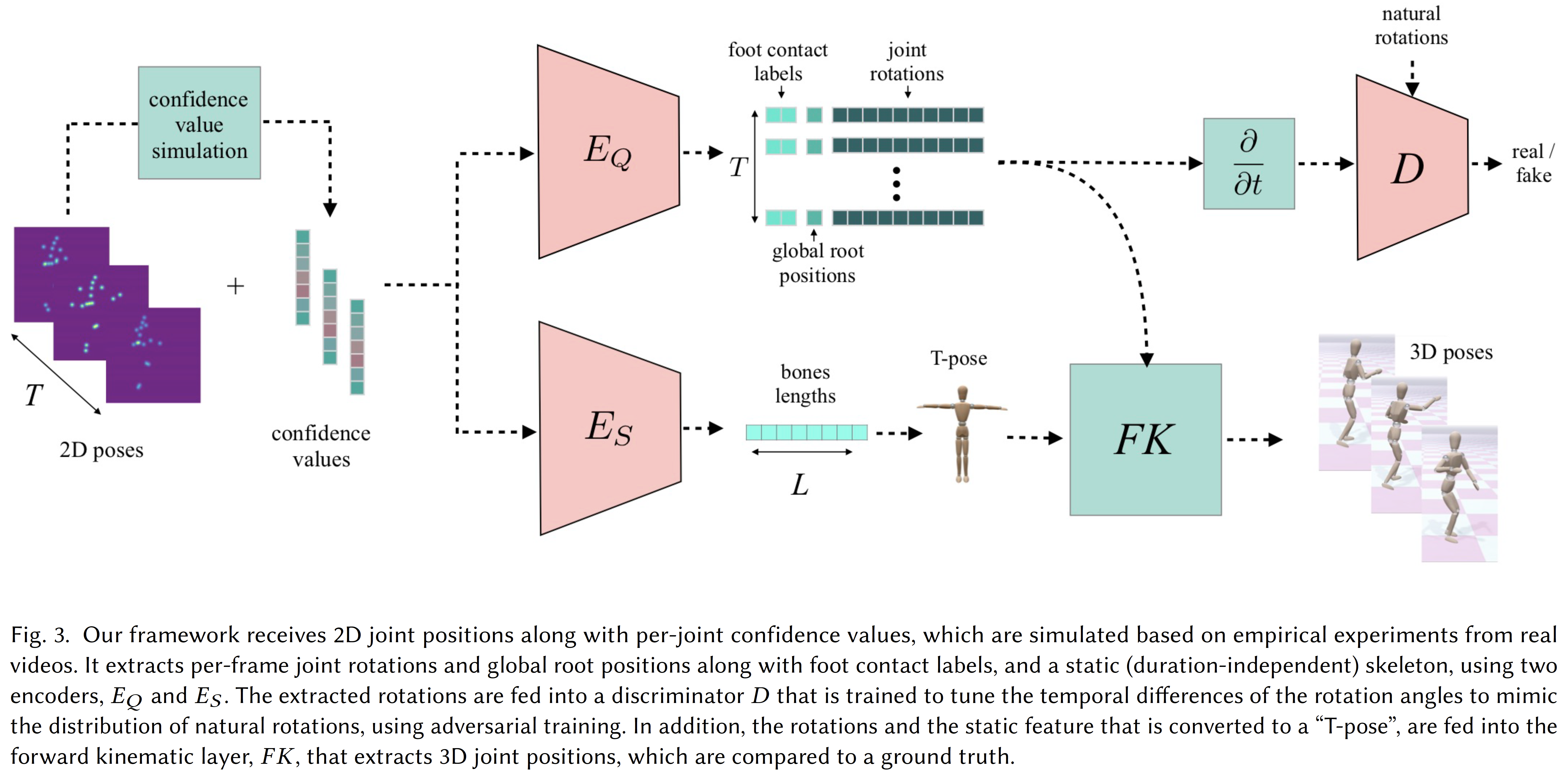

MotioNet is a deep neural network that directly reconstructs the motion of a 3D human skeleton from monocular video. It decomposes sequences of 2D joint positions into two separate attributes: a single, symmetric, skeleton, encoded by bone lengths, and a sequence of 3D joint rotations associated with global root positions and foot contact labels. We show that enforcing a single consistent skeleton along with temporally coherent joint rotations constrains the solution space, leading to a morerobust handling of self-occlusions and depth ambiguities.

Abstract

We introduce MotioNet, a deep neural network that directly reconstructs the motion of a 3D human skeleton from monocular video. While previous methods rely on either rigging or inverse kinematics (IK) to associate a consistent skeleton with temporally coherent joint rotations, our method is the first data-driven approach that directly outputs a kinematic skeleton, which is a complete, commonly used, motion representation. At the crux of our approach lies a deep neural network with embedded kinematic priors, which decomposes sequences of 2D joint positions into two separate attributes: a single, symmetric, skeleton, encoded by bone lengths, and a sequence of 3D joint rotations associated with global root positions and foot contact labels. These attributes are fed into an integrated forward kinematics (FK) layer that outputs 3D positions, which are compared to a ground truth. In addition, an adversarial loss is applied to the velocities of the recovered rotations, to ensure that they lie on the manifold of natural joint rotations. The key advantage of our approach is that it learns to infer natural joint rotations directly from the training data, rather than assuming an underlying model, or inferring them from joint positions using a data-agnostic IK solver. We show that enforcing a single consistent skeleton along with temporally coherent joint rotations constrains the solution space, leading to a more robust handling of self-occlusions and depth ambiguities.

The main contributions of this work include:

- Our network learns to extract a sequence of 3D joint rotations applied to a single 3D skeleton. Thus, IK is effectively integrated within the network, and, consequently, is data-driven (learned),

- Enforcing both a single skeleton and temporally coherent joint rotations not only constrains the solution space, ensuring, consistency as well as leading to a more robust handling of self occlusions and depth ambiguities.

Acknowlegments

This work was supported in part by the National Key R&D Program of China (2018YFB1403900, 2019YFF0302902), the Israel Science Foundation (grant no. 2366/16), and by the European Union’s Horizon 2020 Research and Innovation Programme under Grant Agreement No 739578 and the Government of the Republic of Cyprus through the Directorate General for European Programmes, Coordination and Development

© 2025 Andreas Aristidou