Self-similarity Analysis for Motion Capture Cleaning


Andreas Aristidou, Daniel Cohen-Or, Jessica K. Hodgins, Ariel Shamir

Computer Graphics Forum, 37(2): 297-309, 2018.

Proceedings of Eurographics 2018.

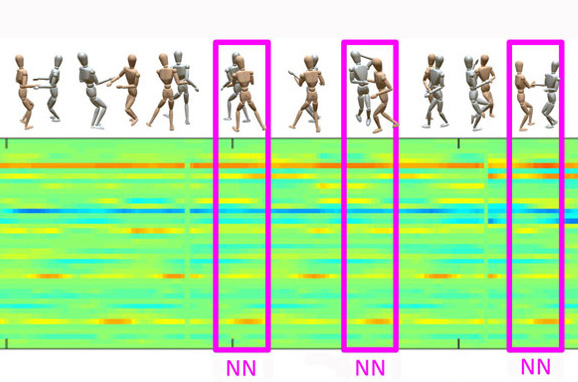

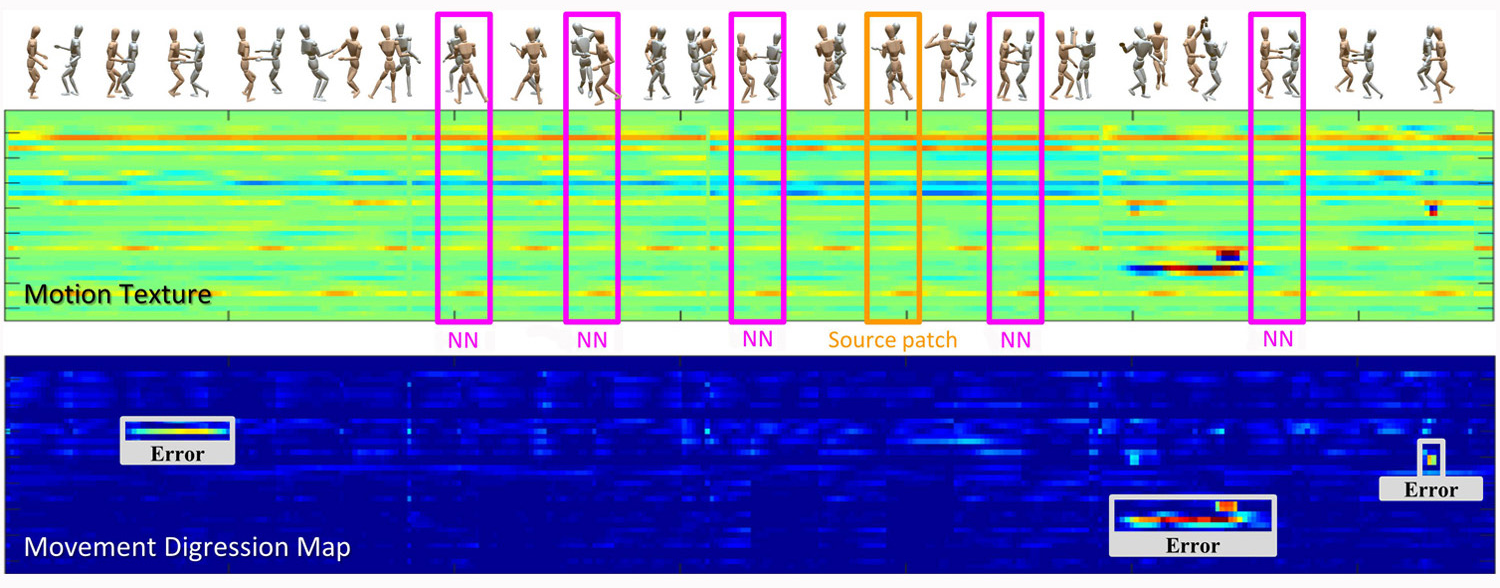

Our method automatically analyzes mocap sequences of closely interacting performers based on self-similarity. We define motion-words consisting of short sequences of joint transformations, and use a time-scale-invariant, outlier-tolerant similarity measure to find the K-nearest neighbors (KNN) of each word. This allows us to detect abnormalities and suggest corrections.


Abstract

Motion capture sequences may contain erroneous data, especially when performers are interacting closely in complex motion and occlusions are frequent. Common practice is to have professionals visually detect the abnormalities and fix them manually. In this paper, we present a method to automatically analyze and fix motion capture sequences by using self-similarity analysis. The premise of this work is that human motion data has a high degree of self-similarity. Therefore, given enough motion data, erroneous motions are distinct when compared to other motions. We utilize motion-words that consist of short sequences of transformations of groups of joints around a given motion frame. We search for the set of K-nearest neighbors (KNN) of each word using dynamic time warping and use it to detect and fix erroneous motions automatically. We demonstrate the effectiveness of our method in various examples, and evaluate it by comparing it to alternative methods and to manual cleaning.
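
The detection idea in the abstract (erroneous motion-words are those that have no close neighbors elsewhere in the data) can be sketched as below. This is a minimal illustration under our own assumptions, not the authors' implementation: a motion-word is a fixed window of 2·half_width + 1 frames of joint angles for one joint group, a plain Euclidean distance stands in for the DTW comparison the paper uses, a word's abnormality score is its mean distance to its K nearest non-overlapping words, and words scoring above mean + z·std are flagged. The helpers `motion_words`, `knn_scores` and `flag_abnormal`, and the values of `half_width`, `k` and `z`, are hypothetical.

```python
import numpy as np

def motion_words(angles, half_width):
    """Cut a (frames, dofs) joint-angle array into motion-words.

    Word t holds frames t - half_width .. t + half_width. Frames closer than
    half_width to either end of the clip get no word in this sketch.
    """
    n = angles.shape[0]
    return {t: angles[t - half_width:t + half_width + 1]
            for t in range(half_width, n - half_width)}

def euclidean(a, b):
    # Placeholder for the paper's DTW comparison; needs equal-length words.
    return float(np.linalg.norm(a - b))

def knn_scores(words, k, min_gap, dist=euclidean):
    """Mean distance from each word to its k nearest non-overlapping words."""
    keys = sorted(words)
    scores = {}
    for t in keys:
        # Words that overlap in time are trivially similar, so skip them.
        d = sorted(dist(words[t], words[s]) for s in keys if abs(s - t) >= min_gap)
        scores[t] = float(np.mean(d[:k]))
    return scores

def flag_abnormal(scores, z=2.0):
    """Return the centre frames of words whose score exceeds mean + z * std."""
    vals = np.array(list(scores.values()))
    cutoff = vals.mean() + z * vals.std()
    return [t for t, s in scores.items() if s > cutoff]

# Toy clip: a periodic 3-DOF motion (period 50 frames) with one glitched frame.
frames = np.arange(200)
angles = np.stack([np.sin(2 * np.pi * frames / 25),
                   np.cos(2 * np.pi * frames / 25),
                   np.sin(2 * np.pi * frames / 50)], axis=1)
angles[100] += 3.0  # simulated capture error on every DOF at frame 100

half_width = 5
words = motion_words(angles, half_width)
scores = knn_scores(words, k=2, min_gap=2 * half_width + 1)
flagged = flag_abnormal(scores)
print(flagged)
```

On this toy clip, the flagged words are the eleven whose windows contain the glitched frame (centre frames 95 to 105), because every clean word has near-exact repeats one period away while the glitched ones do not. Requiring a minimum temporal gap between compared words matters: without it, each word's nearest neighbors would be its own time-shifted copies and nothing would stand out.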

The main contributions of this work include:

- Instead of using absolute marker positions, we use joint angles. Joint angles are relative measures, so more self-similarities can be found in the motion, regardless of the global pose and the absolute positions of the markers.

- Inspired by patch-based self-similarity techniques used in images and video, we do not examine individual motion frames or poses. Instead, we define motion-words as our basic elements for analysis.

- At the core of our self-similarity analysis is a time-scale-invariant similarity measure between two motion-words. Since similar motions can vary in duration, as well as have local speed variations, we use dynamic time warping (DTW) to compare motion-words.

- We build an outlier-tolerant distance measure between motion-words. Our approach ignores noisy pose parts when reconstructing the erroneous motion, which allows a finer-grained representation of the errors: only the erroneous parts are replaced, instead of full-body poses.
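
The last two contributions, a time-scale-invariant comparison via DTW and tolerance to noisy pose parts, can be illustrated together. The page does not give the exact distance, so this is a sketch under stated assumptions: frames are compared by per-joint squared differences of joint-angle values, the classic DTW recurrence aligns the two words, and "outlier tolerance" is approximated by dropping the `n_trim` worst-matching joints in each frame comparison. The functions `trimmed_frame_cost` and `dtw` and the parameter `n_trim` are hypothetical, not the authors' code.

```python
import numpy as np

def trimmed_frame_cost(a, b, n_trim):
    """Squared per-joint error between two poses, ignoring the n_trim worst joints.

    a, b: (joints,) arrays, e.g. one joint-angle value per joint.
    Dropping the largest errors keeps a single corrupted joint from
    dominating the comparison (hypothetical outlier-tolerance scheme).
    n_trim must be smaller than the number of joints.
    """
    err = np.sort((a - b) ** 2)
    return float(err[:len(err) - n_trim].sum())

def dtw(x, y, n_trim=0):
    """DTW distance between motion-words x (n, joints) and y (m, joints)."""
    n, m = len(x), len(y)
    acc = np.full((n + 1, m + 1), np.inf)  # acc[i, j]: best cost aligning x[:i], y[:j]
    acc[0, 0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            cost = trimmed_frame_cost(x[i - 1], y[j - 1], n_trim)
            # Allowed moves: advance x, advance y, or advance both.
            acc[i, j] = cost + min(acc[i - 1, j], acc[i, j - 1], acc[i - 1, j - 1])
    return float(acc[n, m])

# x: a 12-frame, 3-joint motion-word; y: the same motion played at half speed.
f = np.linspace(0.0, 2.0 * np.pi, 12)
x = np.stack([np.sin(f), np.cos(f), np.sin(2.0 * f)], axis=1)
y = np.repeat(x, 2, axis=0)

# y_bad: like y, but joint 2 is corrupted on three frames (e.g. an occlusion).
y_bad = y.copy()
y_bad[6:9, 2] += 5.0

d_speed = dtw(x, y)               # duration change alone costs nothing
d_plain = dtw(x, y_bad)           # the corrupted joint dominates the distance
d_trim = dtw(x, y_bad, n_trim=1)  # worst joint per frame is ignored
print(d_speed, d_plain, d_trim)
```

Here `d_speed` and `d_trim` are both zero, while `d_plain` is large, showing how DTW absorbs a change in duration and how trimming keeps a locally corrupted joint from masking an otherwise good match. The trade-off is sensitivity: a genuine difference confined to a single joint is also ignored, so `n_trim` would need to stay small relative to the number of joints in a group.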

Our Eurographics 2018 Fast Forward Video:

Acknowledgments

This research was supported by the Israel Science Foundation as part of the ISF-NSFC joint program grant number 2216/15.

© 2025 Andreas Aristidou